SQLBits VI

On Friday I attended my 2nd SQLBits conference. This time it was hosted at the Church House Conference Centre, Westminster which was a good centre, with plenty of facilities and some beautiful rooms. It also benefitted from being just a 15 minute walk from Victoria.

The day started with a hot breakfast sandwich (bacon, sausage or egg) and tea or coffee, before the first session started at 9.20am. There were 4 streams of talks, and 3 different audiences - DBAs, Developers, or Business Intelligence users. There was always at least one talk for each audience going on at any one time giving a reasonable amount of choice. Only one talk had to be cancelled due to a speaker not being able to get to London because of the volcano and this was replaced with a panel session.

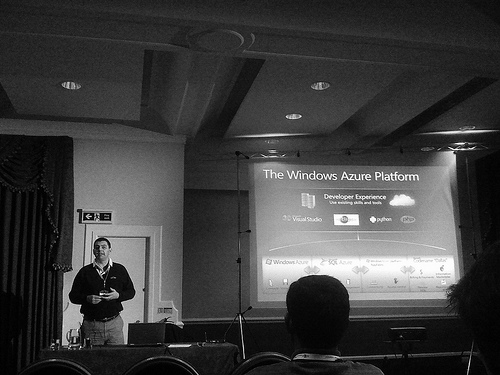

I chose to start by attending the talk

Getting started with SQL Azure by Dr Keith Burns, Data Architect, Microsoft UK (I can't find a website for Keith that has been recently updated - his blog seems to stop in August 2007 - if you know of one, please leave me a comment)

As I mentioned the other day, I am interested in finding out more about the Microsoft offering for cloud computing, so this was an obvious choice. As this was the first talk of the day, my notes are a little fragmented as I got my self into note taking mode, but my key takeaways were:

- Reporting and the BI tools aren't on the cloud yet, so you'd need to use a local version of these tools

- Availability is high - each database is in 3 places, in different scale units

- DB performance is affected by "neighbours" on the same machine. Load balancing is happening automatically but is not perfect yet

- Connections may get killed if it they are for long running transactions

- Updates to SQL Azure will be rolled out on a 2 monthly basis

- Databases can currently be 1GB, or 10GB. If you exceed this you get a nasty looking error message. If you currently have 1GB you can upgrade via an ALTER DATABASE statement. A 50GB offering is in the works as is spatial data

- Prices are in dollars, charged daily - so if you use it for one day you pay for one day - it works out that a 1GB database is approximately £72/year

Tea and pastries time before choosing a DBA talk (the developer one wasn't of real interest to me)

Inside the SQL Server Query optimizer by Conor Cunningham, Principal Architect, SQL Server Query Processor

This was a packed out talk, and I ended up sitting on the floor, as did several others.

I've never really known much about how the optimizer works, and I knew that this wasn't exactly going to be an introductory session, so I was somewhat concerned about how much of it I'd follow, and whether I'd actually gain anything out of it. What I learnt was:

- My maths isn't as good as it once was - I'm sure I'd have understood more whilst I was at university

- The aim from query optimisation is "good enough" - trying to find the best query plan would take too long so good enough will usually do

- Query optimizer is just a set theory engine

- Just indexing is usually insufficient - you want to evaluate whether an index is "covering" as a non covering index will typically need to do a fetch from the base table (SQL Server 2005 and beyond I believe)

- An index can be used to return data, not just for filtering or ordering - hence the use of covering indexes

- Missing index DMVs can suggest covering indexes

- Parallel queries are considered for "expensive" queries (> 5 seconds) but not all operators support parallelism and some scalar functions will prevent parallelism

- User Defined Functions don't have good histogram support - so don't get optimized well - this was picked up in greater detail in Simon Sabin's talk later in the day - so forward to the end of this post if you can't wait to find out

Another quick cup of tea before moving on to

Non-Functional Dependencies - A talktorial on getting performance and scalability right through proper Database Design and understanding Set and Relational theory by Tony Rogerson, SQL Server MVP. The slides for this talk are available here

A discussion on some of the things to be considered when using SQL. Amongst them were:

- a demo of the difference in performance around choosing the different kinds of surrogate keys - IDENTITY, NEWID and NEWSEQUENTIALID (which was new to me) - NEWID causes fragmentation of data and so affects performance, NEWSEQUENTIALID has the benefits of NEWID but without fragmentation

- normalisation - a quick tour through what normalisation is and why you'd use it - I was happy to discover that the majority of this was still tucked away in my memory despite it being about 18 years since I was first taught it in University algorithms lessons

- discussion of the use of NOLOCK (he doesn't like it) and the use of READ_COMMITED_SNAPSHOT (which seemed to have problems too and appeared to be able to break referential integrity) and I didn't come away with an answer of how to ensure that reading data didn't fail because of locks against the data

And then it was lunchtime - a nicely provided brown bag lunch featuring a sandwich, bag of crisp, impossible to peel orange, a muffin and a carton of fruit juice - and time to sit outside in Deans Yard, watching people playing football on the lawn and trying to get my brain to rapidly process everything I'd heard during the morning to allow me to prepare for an afternoon featuring more of the same. The key sponsors had all been given an opportunity to display their wares during a lunchtime spot, so I stopped by to hear about

Redgate's new product SQL Source Control

I've long been a fan of the SQL Compare tool so it seemed obvious to hear about the new product. This demo seemed to fit in every one of their SQL related tools which meant I wish I'd made a Redgate Product Bingo card. The premise of the tool sounds good, integrating into existing source control tools - SVN and TFS at the moment. They also brought my attention to a free tool SQL Search which I'll be downloading and installing when I'm back in the office as it looks like it'll save me querying the INFORMATION_SCHEMA views quite so often. Version 1 of the SQL Source Control tool doesn't manage data, which means that when you add a column that you need to be NOT NULL, you can't specify how that column should be populated for existing data - which means you get an error. It appears that this might be a good solution whilst in early days development, but not so useful for live systems. Deployment is via the following steps:

- get latest of scripts

- compare scripts to backup (via SQL Compare)

- let SQL Compare generate update scripts

Redgate are running an early access program at the moment allowing you to get hold of the tool prior to its actual release.

I had a few minutes here before the next session, although there was no tea to be found before moving on to

Denormalisation - having your cake and eating it by David Whitehorn and Yasmeen Ahmad

Another packed out session

This was quite a theoretical discussion, rather than explaining any tips and tricks on how to achieve your denormalisation. It also covered a lot of the same normalisation content that Tony had covered before lunch. Mainly stating that normalisation is good, but sometimes denormalization is good too - for ease of use, or reporting purposes. Normalisation guarantees good design but only forms part of it and when you normalise you remove a lot of insert, update, delete anomalies but you don't guarantee it which then means using triggers etc to ensure there are no anomalies whatsoever. Normalisation also comes with some performance overheads which may be removed using denormalisation.

Possible methods for denormalisation are:

- Duplicated data

- Splitting tables

- Using derived columns - store calculated values

Basically, the decision as to whether to normalise or denormalise depends what you're going to do with the data.

Time for tea and donuts before the next session

Improving database performance by removing the database by Simon Munro

This talk was about the concept of database less systems - it was pretty well attended which surprised me at a SQL conference. One of the first things Simon said was

This talk is not about NoSQL. It is about why NoSQL won't die

Which was a bit of a shame as I was quite keen to learn about NoSQL. However, he did point us in the direction of this video from the last SQLBits conference.

Scalability is often mentioned as the reason why SQL isn't a good solution - but actually, NoSQL isn't going to be any better. Scalability is about more than just this. He recommended a book The Art of Scalability which gives guidance about the scalability of everything - hardware, software, processes, architectures etc

Some of the takeaway points for me were:

- Before we change something, to make an effective change we need to understand why it is like it is in the first place

- Data is temporal - it's value changes over time

- Never forget that people create a lot of spreadsheets to mould data how they like it - so your database isn't likely to be the only place where data is stored no matter how much you want to believe it is

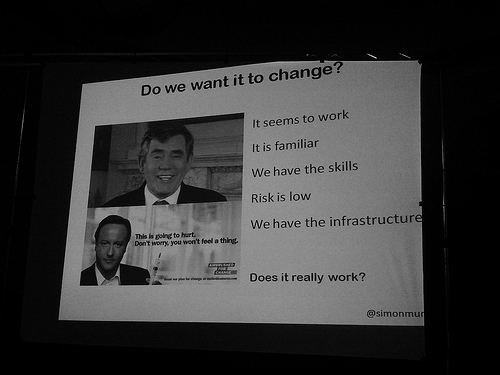

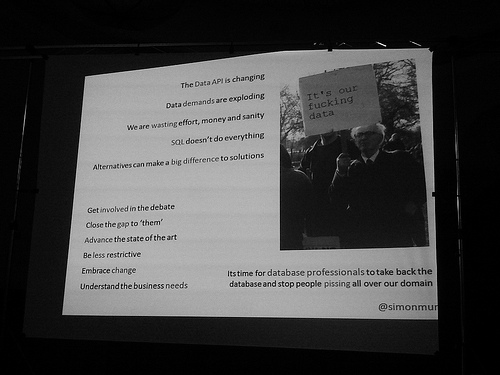

Simon had some of the best slide decks I saw on the day, I particularly liked this one

and this one

This was probably the most interesting and engaging of the talks I went to, and it challenged me to go away and think more about some of his points.

The final break of the day before the final talk which for me was

High Performance Functions by Simon Sabin

I'd seen Simon present on a couple of previous occasions so I already knew that he'd be a good way to end the day. And he was. This followed on nicely from Conor's earlier stuff about scalar valued User Defined Functions not being optimised properly and gave some demonstrations to prove it - basically the query plan showed lots of nested loops, which indicate that each scalar function believes itself to return only one row. Simon acknowledged the reason that UDFs, when they were first created, were welcomed by the developer community was because of the help they gave to code reuse - something that I'm sure we all strive for. The best workaround presented was to use query hints - hash /merge - to help query plan generation, where hash join is the safest option when there is no option but to use a scalar function.

But an alternative, at least in SQL 2008, is to use Inline table valued functions, mainly because:

- the function returns a query

- they work a bit like a parameterized view

- the query is consumed into main query for query plan because they are resolved down to base functions

Another alternative is to use the CLR as an alternative approach - the more complex the operation, the more chance that the CLR will perform better than SQL.

Simon's summary was:- Don't use scalar fns

- Do convert to inline table fns

- Do consider using CLR

And that was the end of SQLBits VI - the evening continued with beer, pizza and games at the conference centre, but I sloped off home with a brain full of information to process and a can of coke for my journey. Another excellent SQLBits.